Our Human Readable Sitemap

What are sitemaps and why do I need two?

In its simplest form, a sitemap is a one-page summary of your website's pages. It lets a visitor find a particular page or blog post without using drop-down menus or navigating through a series of links.

However, as search engines have developed more complex tools for finding website content, a website visitor can now be either a Human or a Machine (specifically a crawler bot). Humans and Machines interpret formatting, language and layout quite differently, so it is now strongly recommended, if not required, to create both a Human Readable and a Machine Readable summary of your website.

This page, specifically the section above, is an example of a Human Readable format: it provides plain text links and clearly defined formatting to make finding content across the site easy. Read on to learn about XML and generating XML files for machine reading.

XML Sitemaps for Google Indexing

Search Engine Optimisation is all about optimising your website so that it ranks highly for searches relevant to your content. This usually relates to Organic traffic, whereby visitors find you without the use of digital advertising because your site content is highly relevant to their search terms.

To determine relevancy, Google uses a number of algorithms to match your website's content, both written and visual, to the search term(s) entered by the potential site visitor. To learn what content you have on your website, Google uses automated crawler bots, which continuously navigate the internet searching for content and noting when content has been edited, updated or removed.

In addition to a Human Readable (plain text) map such as this page, good SEO practice includes generating an Extensible Markup Language (XML) sitemap and submitting it to Google Search Console, Google's tool for webmasters.
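
As a rough illustration of what a machine-readable sitemap contains, the sketch below builds a minimal XML sitemap with Python's standard library. The `build_sitemap` helper, the example.com URLs and the date are hypothetical placeholders rather than anything taken from this site; the `urlset`, `url`, `loc` and `lastmod` element names and the namespace come from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, lastmod) pairs.

    lastmod is an optional YYYY-MM-DD string; pass None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
example_pages = [
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/", None),
]
sitemap_xml = build_sitemap(example_pages)
print(sitemap_xml)
```

In practice a plugin or generator tool will usually produce this file for you; the sketch is only meant to show that the format is a plain list of page addresses with optional metadata.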

Learn more about Google's requirements below.

Generating Your XML File & Submitting It To Google

- Prior to creating your sitemap, we recommend using a reputable SEO plugin such as Yoast or RankMath to set each of your pages to index or no-index. This is important because there may be pages that you'd prefer not to be indexed, such as your Terms and Conditions and/or Privacy Policy. Once you've done this, we recommend using XMLSitemapGenerator.org to generate your machine-readable XML file, as it has a number of settings to help ensure adherence to best practice.

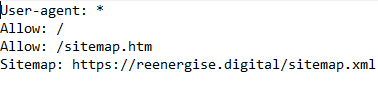

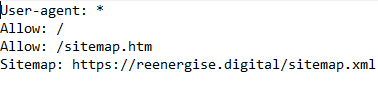

The generator also assists in the creation of a robots.txt file, which tells search engines which parts of your site you'd like crawled and which to skip, as well as the location of your XML file.

- Next, we recommend creating a Google Account specifically for your marketing efforts (e.g. xyzcompanymarketing@gmail.com). This keeps all your Google services in a single account, lets you give editor permissions to other email addresses, and makes it easy to link up your Analytics, Tag Manager and Google Search Console.

- Add Google Analytics or Tag Manager to your site. Once you've got the Analytics or GTM snippet on your website, you'll be able to use it to verify your site on Google Search Console.

- Navigate to the Sitemaps section of Google Search Console and submit the URL of your XML sitemap. Your robots.txt file should sit at the root of your site so that crawlers can find it.
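
To make the robots.txt step more concrete, here is a minimal sketch that writes a robots.txt with a `Sitemap:` line and then checks it with Python's built-in `urllib.robotparser`. The `build_robots_txt` helper, the `/wp-admin/` path and the example.com URLs are assumptions for illustration only. Note that robots.txt controls what crawlers may visit; the index or no-index choice itself is set per page, for example by your SEO plugin.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, sitemap_url):
    """Return robots.txt text that applies to all crawlers."""
    lines = ["User-agent: *"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Hypothetical values, for illustration only.
robots_txt = build_robots_txt(
    ["/wp-admin/"], "https://www.example.com/sitemap.xml"
)

# Parse the generated text and check how a crawler would read it.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

admin_ok = parser.can_fetch("*", "https://www.example.com/wp-admin/settings")
blog_ok = parser.can_fetch("*", "https://www.example.com/blog/")
sitemaps = parser.site_maps()  # requires Python 3.8 or newer

print(robots_txt)
print(admin_ok, blog_ok, sitemaps)
```

Checking the file this way before publishing is a cheap safeguard against accidentally blocking pages you want crawled.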

Following the above process saves you waiting for crawler bots to revisit your site: it informs Google about your changes, reducing the time it takes to index edits and new pages. Make sure to set up redirects for any deleted pages to minimise 404 errors across your website.
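
If you keep a list of redirects for deleted pages, it can help to check it for loops and long chains before loading it into your redirect plugin or server. The sketch below assumes the redirects are held as a simple old-path to new-path mapping; the `resolve_redirect` helper, the `max_hops` limit and the paths are hypothetical and not tied to any particular plugin.

```python
def resolve_redirect(path, redirects, max_hops=5):
    """Follow a redirect map from path to its final destination.

    Raises ValueError if the map loops or the chain exceeds max_hops.
    """
    seen = set()
    while path in redirects:
        if path in seen or len(seen) >= max_hops:
            raise ValueError(f"redirect loop or chain too long at {path}")
        seen.add(path)
        path = redirects[path]
    return path


# Hypothetical redirect map: deleted page -> replacement page.
redirects = {
    "/old-services/": "/services/",
    "/services-2019/": "/old-services/",
}

final_target = resolve_redirect("/services-2019/", redirects)
print(final_target)
```

Pointing each deleted page straight at its final destination, rather than through a chain, keeps the redirects simple for both visitors and crawlers.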
